VMotion fails with “Source detected that destination failed to resume”

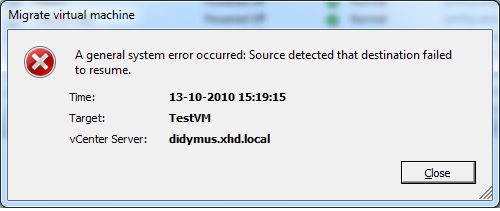

I recently came across a problem where VMotions would fail at 82%. The failure would be “A general system error occurred: Source detected that destination failed to resume”. So what’s wrong here?

What’s wrong?

The environment is based on NFS storage. Looking closer in the logs, I found that the VMotion process actually appears to try to VMotion a VM from one datastore to another datastore, which is kind of impossible unless we were doing a storage VMotion.

Now for the weirder part: in vCenter, all NFS mounts appear to be mounted in exactly the same fashion. But looking at the NFS mounts through the command line revealed the problem: the output of esxcfg-nas -l differed between the nodes and showed different mount paths! The trouble lies in the name used for the NFS storage device when the shares were added to ESX: IP address, shortname or FQDN. Even a difference in capitalization is enough to cause the issue (!).

It’s Lab Time!

I’m not sure whether this is a bug or a feature within VMware. The fact is that I have found no way to spot this issue from vCenter (NAS mounts do not show a device ID anywhere in the config). Funnily enough, vCenter somehow does see the differences in the NFS box name on the various hosts, and changes all NAS box names to the one you most recently messed with in vCenter! This is very dirty indeed.

Potentially you could spot this situation: if you refresh the storage of an ESX node (under configuration->storage), all other nodes will follow and display the mount name used by the host you just refreshed! If you then refresh the storage of another ESX host (one which uses a different name to mount the NFS box), the mount names of all other ESX nodes in the cluster change again, this time to the mount name of the host refreshed last! Only when you use the command line (vdf -h or esxcfg-nas -l) can you spot the difference more easily.
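With more than a couple of hosts, comparing that output by eye gets tedious. Below is a minimal Python sketch of the comparison, assuming you have already collected the `esxcfg-nas -l` output of each host as text (how you collect it is up to you). The function names and the regular expression are my own illustration, not anything VMware ships, and the pattern assumes datastore labels without spaces. The comparison is deliberately an exact string match, because a difference in case alone is enough to trigger the problem.

```python
import re
from collections import defaultdict

# Matches lines such as: "nas01_nfs is /nfs/nas01_nfs from xhd-nas01 mounted"
# Assumption: labels, share paths and NAS host names contain no spaces.
NAS_LINE = re.compile(r"^(?P<label>\S+) is (?P<share>\S+) from (?P<host>\S+)")


def parse_nas_list(output):
    """Return {label: (nas_host, share)} parsed from `esxcfg-nas -l` text."""
    mounts = {}
    for line in output.splitlines():
        match = NAS_LINE.match(line.strip())
        if match:
            mounts[match.group("label")] = (match.group("host"), match.group("share"))
    return mounts


def find_mismatches(outputs_by_esx_host):
    """Return the labels whose (nas_host, share) pair differs between ESX hosts.

    The comparison is an exact, case-sensitive string match on purpose:
    "xhd-nas01" and "XHD-NAS01" count as different.
    """
    seen = defaultdict(dict)
    for esx_host, output in outputs_by_esx_host.items():
        for label, source in parse_nas_list(output).items():
            seen[label][esx_host] = source
    return {
        label: per_host
        for label, per_host in seen.items()
        if len(set(per_host.values())) > 1
    }


if __name__ == "__main__":
    # Sample lines taken from the two lab hosts in this post.
    outputs = {
        "Terrance": "nas01_nfs is /nfs/nas01_nfs from xhd-nas01 mounted",
        "Phillip": "nas01_nfs is /nfs/nas01_nfs from XHD-NAS01 mounted",
    }
    for label, per_host in find_mismatches(outputs).items():
        print(label, per_host)
```

Any label that comes back from `find_mismatches` is a datastore that was added with a different name or path on at least one host.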

In vCenter:

As you can see, both of my homelab hosts are happily connected to exactly the same nas device, namely “xhd-nas01” (by the way ‘xhd’ comes from http://.www.xhd.nl, my other hobby 🙂 and yes, my two ESX nodes are called “Terrance” and “Phillip” 🙂 ).

But when looking from the console, I see this:

[root@Terrance ~]# esxcfg-nas -l

nas01_nfs is /nfs/nas01_nfs from xhd-nas01 mounted

[root@Phillip ~]# esxcfg-nas -l

nas01_nfs is /nfs/nas01_nfs from XHD-NAS01 mounted

As you can see, I used the shortname for the NAS, but I used capitals for one of the mounts. This in turn is enough to change the device ID of the NAS share:

[root@Phillip ~]# vdf -h /vmfs/volumes/nas01_nfs/

Filesystem Size Used Avail Use% Mounted on

/vmfs/volumes/eeb146ca-55f664e9

922G 780G 141G 84% /vmfs/volumes/nas01_nfs

[root@Terrance ~]# vdf -h /vmfs/volumes/nas01_nfs/

Filesystem Size Used Avail Use% Mounted on

/vmfs/volumes/22b2b3d6-c1bff8b0

922G 780G 141G 84% /vmfs/volumes/nas01_nfs

So in fact, the Device IDs really ARE different! Everything works, right until you try to perform a VMotion. Then you encounter the “Source detected that destination failed to resume” error.

So how to solve the problem?

If you have the luxury of being able to shut down your VMs: shut them down, remove the VMs from inventory, remove the NAS mounts from your hosts, then reconnect the NAS mounts (this time using the exact same mounthost name!). After that, you can add the VMs back to your environment and start them again.

If shutting your VMs is not that easy, you could consider creating new NAS mounts (or find some other storage space), and use storage VMotion to get your VMs off the impacted NAS mounts. Then remove and re-add your NFS stores and use storage VMotion to move the VMs back again.
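Whichever route you take, the remount itself has to use one identical name on every host. The sketch below only builds the command lines as strings so you can review them before running anything. It assumes the `esxcfg-nas -d <label>` (delete) and `esxcfg-nas -a -o <host> -s <share> <label>` (add) forms as I know them from the ESX service console; check `esxcfg-nas -h` on your own build first. The helper name is hypothetical, and the FQDN in the example is simply the one from my lab.

```python
import shlex


def remount_commands(label, share, nas_host):
    """Build the delete/add command pair for one NFS datastore.

    Nothing is executed here; the strings are meant to be reviewed and then
    run on each ESX host once no running VM lives on the datastore.
    Assumption: the -d and -a -o -s flags behave as on the classic ESX console.
    """
    return [
        "esxcfg-nas -d " + shlex.quote(label),
        "esxcfg-nas -a -o {} -s {} {}".format(
            shlex.quote(nas_host), shlex.quote(share), shlex.quote(label)
        ),
    ]


if __name__ == "__main__":
    # Use the exact same nas_host string for every ESX host in the cluster.
    for command in remount_commands("nas01_nfs", "/nfs/nas01_nfs", "xhd-nas01.xhd.local"):
        print(command)
```

Generating the commands from a single `nas_host` value, rather than typing them per host, is the whole point: it removes the chance of a stray capital or a shortname slipping in.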

In my testlab I created an additional NFS mount, this time on both nodes using the same name (FQDN in this case):

[root@Terrance ~]# esxcfg-nas -l

nas01_nfs is /nfs/nas01_nfs from xhd-nas01 mounted

nas01_nfs02 is /nfs/nas01_nfs02 from xhd-nas01.xhd.local mounted

[root@Phillip ~]# esxcfg-nas -l

nas01_nfs is /nfs/nas01_nfs from XHD-NAS01 mounted

nas01_nfs02 is /nfs/nas01_nfs02 from xhd-nas01.xhd.local mounted

And you can see that this time the device IDs of the new mount (nas01_nfs02) really ARE equal on both hosts:

[root@Terrance ~]# vdf -h /vmfs/volumes/nas01_nfs*

Filesystem Size Used Avail Use% Mounted on

/vmfs/volumes/22b2b3d6-c1bff8b0

922G 780G 141G 84% /vmfs/volumes/nas01_nfs

/vmfs/volumes/5f2b773f-ab469de6

922G 780G 141G 84% /vmfs/volumes/nas01_nfs02

[root@Phillip ~]# vdf -h /vmfs/volumes/nas01_nfs*

Filesystem Size Used Avail Use% Mounted on

/vmfs/volumes/eeb146ca-55f664e9

922G 780G 141G 84% /vmfs/volumes/nas01_nfs

/vmfs/volumes/5f2b773f-ab469de6

922G 780G 141G 84% /vmfs/volumes/nas01_nfs02
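The same check can be done on the device IDs themselves. Here is a rough Python sketch that reads `vdf -h` output in the wrapped two-line layout shown above (volume path on one line, sizes and mount point on the next) and reports which mount points have a different ID on two hosts. The function names are my own, and the ID pattern is an assumption based purely on the IDs seen in this post.

```python
import re

# Device ID lines look like "/vmfs/volumes/22b2b3d6-c1bff8b0" in the output above.
# Assumption: NFS device IDs are always two groups of eight hex digits.
VOLUME_ID = re.compile(r"^/vmfs/volumes/(?P<id>[0-9a-f]{8}-[0-9a-f]{8})$")


def device_ids(vdf_output):
    """Map mount point -> device ID from wrapped `vdf -h` output."""
    ids = {}
    pending = None  # device ID waiting for its sizes/mount-point line
    for raw in vdf_output.splitlines():
        line = raw.strip()
        if not line:
            continue
        match = VOLUME_ID.match(line)
        if match:
            pending = match.group("id")
        elif pending is not None:
            mount_point = line.split()[-1]
            if mount_point.startswith("/vmfs/volumes/"):
                ids[mount_point] = pending
            pending = None
    return ids


def differing_mounts(ids_a, ids_b):
    """Return mount points present on both hosts whose device IDs differ."""
    shared = ids_a.keys() & ids_b.keys()
    return sorted(mp for mp in shared if ids_a[mp] != ids_b[mp])
```

Fed with the two listings above, only the old nas01_nfs mount should be reported; nas01_nfs02 has the same ID on both hosts.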

After a storage VMotion to this new NFS store the VM VMotioned without issue… Problem solved!


Thanks for this article! I had the exact same problem, and this helped me fix it! Thank you!

Thanks for this post, it helped me fix this issue too.

You can also set the vSphere host in maintenance mode and unmount the NFS Datastore. Right after the unmount you can mount the Datastore again. After this remount action I got the same Device ID back and was able to vMotion the VM.

Thanks for the solution. This worked for us too.

Thanks for this great explanation. I thought I was on to it, but at first I only checked the mounts in the GUI. This blog post explains very well why that doesn’t show the actual misconfiguration.

Will follow your blog posts through Google Reader from now on. Keep up the good work!

/Anders

It’s not just host or case. I got the problem with:

NFS01 is /mnt/vg1/nfs10/NFS10/ from 10.100.100.39 mounted vs

NFS01 is /mnt/vg1/nfs10/NFS10 from 10.100.100.39 mounted

Of course, I named these hosts ESXi1 and ESXi2. I get an “F” for originality.

Thanks for posting this. I know it’s old, but this issue is still in VMware. There is no way to determine if you have this issue unless you use the command line. I had some hosts mounting the nfs storage via IP, and some via hostname.

-Mike