Posts Tagged ‘VMware’

The Dedup Dilemma

Everybody does it – and if you don’t, you can’t play along. What am I talking about? Data deduplication. It’s the best thing since sliced bread I hear people say. Sure it saves you a lot of disk space. But is it really all that brilliant in all scenarios?

The theory behind Data Deduplication

The idea is truly brilliant: you store blocks of data in a storage solution, and you create a hash which identifies the data inside each block. Every time you need to back up a block, you check (using the hash) whether you already have the block in storage. If you do, you just write a pointer to the existing data. Only if you do not have the block yet do you copy it and add it to the dedup database. The advantage is clear: the more identical data you store, the more disk space you save. Especially in VMware environments, where many VMs are deployed from the same templates, this can be a very big saving in disk space.
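To make the idea concrete, here is a minimal sketch in Python. It is a toy model and not how any particular vendor does it: the class name `DedupStore`, the 4KB block size and the choice of SHA-256 are all my own assumptions for illustration.

```python
import hashlib


class DedupStore:
    """Toy block store: every unique block is kept once, keyed by its hash."""

    def __init__(self, block_size=4096):  # block size is an assumption
        self.block_size = block_size
        self.blocks = {}  # hash -> block data (the "dedup database")

    def backup(self, data):
        """Store data; return the list of hashes (pointers) that describes it."""
        pointers = []
        for offset in range(0, len(data), self.block_size):
            block = data[offset:offset + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            if digest not in self.blocks:
                # Block not seen before: copy it into the store.
                self.blocks[digest] = block
            # Known or new, the backup itself only records a pointer.
            pointers.append(digest)
        return pointers

    def restore(self, pointers):
        """Rebuild the original data by following the pointers."""
        return b"".join(self.blocks[p] for p in pointers)


# Two "VMs" built from the same 10-block template, each with one private block.
template = b"".join(bytes([i]) * 4096 for i in range(10))
vm1 = template + b"x" * 4096
vm2 = template + b"y" * 4096

store = DedupStore()
vm1_pointers = store.backup(vm1)
vm2_pointers = store.backup(vm2)

print(len(vm1_pointers) + len(vm2_pointers), "blocks backed up")
print(len(store.blocks), "blocks actually stored")
```

The second VM only adds its single private block to the store; the ten template blocks are already there.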


The actual dilemma

Certainly a nice thing about deduplication, next to the large amounts of storage (and associated costs) you save, is that when you deduplicate at the source, you end up sending only new blocks across the line, which can dramatically reduce the bandwidth you need between remote offices and central backup locations. Deduplication at the source also means you generally spread the CPU load across your remote servers instead of concentrating it in the storage solution.

Since every upside has a downside, data deduplication certainly has its downsides too. For example, if I had 100 VMs, all from the same template, there surely are blocks that occur in each and every one of them. If that particular block gets corrupted… Indeed! You lose ALL your data. Continuing to scare you: if the hash algorithm you use is insufficient, two different data blocks might be identified as being equal, resulting in corrupted data. Make no mistake, the only way to be 100% sure that two blocks are equal is to use a hash as big as the block itself (rendering the solution kind of useless). All dedup vendors use shorter hashes (I wonder why 😉 ), and live with the risk (which is VERY small in practice but never zero). The third major drawback is the speed at which the storage device is able to deliver your data (un-deduplicated) back to you, which especially hurts on backup targets that have to perform massive restore operations. Final drawback: you need your ENTIRE database in order to perform any restore (at least, you cannot be sure in advance which blocks are going to be required to restore a particular set of data).
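To get a feeling for "very small but never zero", here is a rough sketch using the standard birthday-bound approximation for hash collisions. The store size (1 PB of 4KB blocks) and the hash widths are assumptions I picked for illustration, not figures from any vendor.

```python
import math


def collision_probability(num_blocks, hash_bits):
    """Birthday-bound approximation: p ~ 1 - exp(-n(n-1) / 2^(bits+1))."""
    exponent = -num_blocks * (num_blocks - 1) / 2 ** (hash_bits + 1)
    # expm1 keeps precision when the probability is extremely small.
    return -math.expm1(exponent)


# Assumed store: 1 PB of unique 4KB blocks = 2^50 / 2^12 = 2^38 blocks.
blocks_in_store = 2 ** 38

for bits in (32, 64, 160, 256):
    p = collision_probability(blocks_in_store, bits)
    print(f"{bits:3d}-bit hash: collision probability ~ {p:.3g}")
```

With a short hash a collision is practically guaranteed at this scale; with a 160-bit or larger hash the probability is tiny, but as said, it never reaches zero.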


So – should I use it?

The reasons stated above always kept me a skeptic when it came to data deduplication, especially for backup purposes. Because at the end of the day, you want your backups to be functional, and not requiring the ENTIRE dataset in order to perform a restore. Speed can also be a factor, especially when you rely on restores from the dedup solution in a case of disaster recovery.

Still, there are definitely uses for deduplication. Most vendors have solved most of these issues successfully, for example by making it possible to access un-deduplicated data directly from the storage solution (enabling separate backups to tape etc). I have been looking at the new version of esXpress with their PHDD dedup targets, and I must say it is a very elegant solution (on which I will write a blog post shortly 🙂 ).

Surviving total SAN failure

Almost every enterprise setup for ESX features multiple ESX nodes, multiple failover paths, multiple IP and/or fiber switches… But having multiple SANs is hardly ever done, except in Disaster Recovery environments. But what if your SAN decides to fail altogether? And even more important, how can you prevent impact if it happens to your production environment?

Using a DR setup to cope with SAN failure

One option to counter the problem of total SAN failure would of course be to use your DR-site’s SAN, and perform a failover (either manual or via SRM). This is kind of a hard call to make: Using SRM will probably not get your environment up within the hour, and if you have a proper underlying contract with the SAN vendor, you might be able to fix your issue on the primary SAN within the hour. No matter how you look at it, you will always have downtime in this scenario. But in these modern times of HA and even Fault Tolerance (vSphere4 feature), why live with downtime at all?

Using vendor-specific solutions

A lot of vendors have thought about this problem, and especially in the IP-storage corner one sees an increase in “high available” solutions. Most of the time relative simple building blocks are simply stacked, and can then survive a SAN (component) failure in that case. This is one way to cope with issues, but it generally has a lot of restrictions – such as vendor lock-in and an impact on performance.

Why not do it the simple way?

I have found that simple solutions are generally the best solutions. So I tried to approach this problem from a very simple angle: from within the VM. The idea is simple: you use two storage boxes which your ESX cluster can use, you put a VM's disk on a LUN on the first storage box, and you simply add a software mirror on a LUN on the second storage box. It is almost too easy to be true. I used a Windows 2003 server VM, converted the boot drive to a dynamic disk, added a second disk to the VM (placed on the second storage box), and chose “add mirror” on the boot disk with the second disk as its target.

Unfortunately, it did not work right away. As soon as one of the storages fails, VMware ESX reports “SCSI BUSY” to the VM, which will cause the VM to freeze forever. After adding the following to the *.vmx file of the VM, things got a lot better:

scsi0.returnBusyOnNoConnectStatus = "FALSE"

Now, as soon as one of the LUNs fails, the VM has a slight “hiccup” before it decides that the mirror is broken, and it continues to run without issue or even lost sessions! After the problem with the SAN is fixed, you simply perform an “add mirror” within the VM again, and after syncing you are ready for your next SAN failure. Of course you need to remember that if you have 100+ VMs to protect this way, there is a lot of work involved…

This has proven to be a simple yet very effective way to protect your VMs from a total (or partial) SAN failure. A lot of people do not like the idea of using software RAID within the VMs, but eh, in the early days, who gave ESX a thought for production workloads? And just to keep the rumors going: To my understanding vSphere is going to be doing exactly this from an ESX point of view in the near future…

To my knowledge, at this time there are no alternatives besides the two described above to survive a SAN failure with “no” downtime (unless you go down the software clustering path of course).

Resistance is ViewTile!

Nowadays, more and more companies realize that virtual desktops are the way to go. It seems inevitable. Resistance is Futile. But how do you scale up to, for example, 1000 users per building block? How much storage do you need, and how many spindles? Especially with the availability of VMware View 3, the answers to these questions become more and more complex.

Spindle counts

Many people still design their storage requirements based on the amount (in GBytes) of storage needed. For smaller environments, you can actually get away with this. It seems to “fix itself” given the current spindle sizes (just don’t go and fill up 1TB SATA spindles with VMs). The larger spindle sizes of today and the near future however, make it harder and harder to maintain proper performance if you are ignorant about spindle counts. Do not forget, those 50 physical servers you had before actually had at least 100 spindles to run from. After virtualization, you cannot expect them all to “fit” on a (4+1) RAID5. The resulting storage might be large enough, but will it be fast enough?

Then VMware introduced the VMmark Tiles. This was a great move; a Tile is a simulated common load for server VMs. The result: The more VMmark Tiles you can run on a box, the faster the box is from a VMware ESX point of view.

In the world of View, there really is no difference. A thousand physical desktops have a thousand CPUs, and a thousand (mostly SATA) spindles. Just as in the server virtualization world, one cannot expect to be able to run a thousand users off ten 1TB SATA drives. Although the storage might be sufficient in the number of resulting terabytes, the number of spindles in this example would obviously not be sufficient. A hundred users would all have to share a single SATA spindle!

So basically, we need more spindles, and we might even have to keep expensive gigabytes or even terabytes unused. The choice of spindle type is going to be the key here: using 1TB SATA drives, you'd probably end up using 10TB, leaving about 40TB empty. Unless you have a master plan for putting your disk-based backups there (if no vDesktops are used at night), you might consider going for faster, smaller spindles. Put finance in the mix and you have some hard design choices to make.
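The 10TB used versus 40TB empty figure can be reproduced with a quick back-of-the-envelope sketch. The 20 users per spindle is my own inference from those numbers (50 drives for 1000 users), the 10GB per desktop is an assumption, and RAID overhead is ignored entirely.

```python
import math


def size_by_spindles(users, gb_per_user, users_per_spindle, spindle_gb):
    """Size storage on spindle count first, then see how much capacity is left over."""
    spindles = math.ceil(users / users_per_spindle)
    used_gb = users * gb_per_user
    unused_gb = spindles * spindle_gb - used_gb
    return spindles, used_gb, unused_gb


# 1000 users, 10GB each, an assumed 20 users per spindle.
print(size_by_spindles(1000, 10, 20, 1000))  # 1TB SATA drives
print(size_by_spindles(1000, 10, 20, 300))   # smaller (hypothetical 300GB) drives
```

Same spindle count, same performance envelope in this simple model, but far fewer unused gigabytes to pay for with the smaller drives.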

Linked cloning

Just when you thought the equation was relatively simple, like “a desktop has a 10GB virtual drive period”, Linked cloning came about. Now you have master images, replicas of these masters, and linked clones from the replicas. Figuring out how much storage you need, and how many spindles you need just got even harder to determine!

Let's assume we have one master image which is 10GB in size. For roughly every 64 clones, you are going to need a replica. You can add up to about 4 replicas per master image. None of this is an exact science though; these are just recommendations found here and there. But how big are these linked clones going to be? This again depends heavily on things like:

- do you design separate D: drives for the linked clones where they can put their local data and page files;

- What operating system are you running for the vDesktops;

- Do you allow vDesktops to “live” beyond one working day (e.g. do you revert to the master image every working day or not).

Luckily, the amount of disk IOPS per VM is not affected by the underlying technology. Or is it? SAN caching is about to add yet another layer of complexity to the world of View…

Cache is King

Let’s add another layer of complexity: SAN caching. From the example above, if you would like to scale up that environment to 1000 users, you would end up with 1000/64 = 15,6, so 16 LUNs, each holding its own replica together with its linked clones. If, in a worst-case scenario, all VMs boot up in parallel, you would have an enormous amount of disk reads on the replicas (since booting requires mostly read actions). Although all replicas are identical, the SAN has no knowledge of this. The result is that, in a perfect world, the blocks used for booting the VMs of all 16 replicas should be in the read cache. Let's say our XP image uses 2GB of blocks for booting; you would then optimally require a read cache in the SAN of 16*2=32GB. The less cache you have, the more performance will degrade. Avoiding these worst-case scenarios is of course another way to manage with less cache. Still I guess in a View 3 environment: “Cache is King“!
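The arithmetic behind the 32GB is simple enough to put in a small sketch. The function name is mine, and the 64 clones per replica and 2GB of boot blocks are just the rule-of-thumb values used above.

```python
import math


def replica_read_cache_gb(users, clones_per_replica=64, boot_gb_per_replica=2):
    """Worst case: the boot blocks of every replica sit in the SAN read cache at once."""
    replicas = math.ceil(users / clones_per_replica)  # one replica (and LUN) per group of clones
    return replicas, replicas * boot_gb_per_replica


replicas, cache_gb = replica_read_cache_gb(1000)
print(replicas, "replicas,", cache_gb, "GB of read cache")
```

Because the SAN cannot tell that the replicas are identical, the cache requirement grows linearly with the number of replicas in this model.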

While I’m at it, I might just express my utmost interest in the developments at SUN, their Amber Road product line to be more exact. On the inside of these storage boxes, SUN uses the ZFS file system. One of the things that really could make a huge difference here is the ability of ZFS to move content to different tiers (faster storage versus slower storage) depending on how heavily this content is being used. Add high-performance SSD disks in the mix, and you just might have an absolute winner, even if the slowest-tier storage is “only” SATA. I cannot wait for performance results of VDI-like usage on these boxes! My expectations are high, if you can get a decent load balance on the networking side of things (even a static load balance per LUN would work in VDI-like environments).

Resistance is ViewTile!

As I laid out in this blog post, there are many layers of complexity involved when attempting to design a VDI environment (especially the storage-side of things). It is becoming almost too complex to use “theory only” on these design challenges. It would really help to have a View-Tile (just like the server-side VMmark Tiles we have now). The server tiles are mostly used just to prove the effectiveness of a physical server running ESX, the CPU, the bus structure etc. A View-Tile would potentially not only prove server effectiveness, but also very much the storage solution used (and the FC- / IP-storage network design in between). So VMware: a View-Tile is definitely on my wish list for Christmas (or should I consider to get a life after all? 😉 )

The VCDX "not quite Design" exam

Last week I was in London to complete the VCDX Design beta exam. This long awaited exam consists of a load of questions to be completed in four hours. In this blog post I will give my opinion on this exam. Because the contents of the exam should not be shared with others, I will not be giving any hints and tips on how to maximize your score if you participate, but I will address the kind of questions and my expectations in this blog post.

First off, there was way too little time to complete the exam. I tried to type comments on some questions with obvious errors, or on questions where I suspected something wasn’t quite right. All questions require some reading, so I could nicely judge whether I needed to speed up or slow down. Unfortunately, somewhere near question 100, I stumbled upon a question with pages' worth of reading! So I had to “hurry” that one, and as a result, the rest as well. Shame. Also, VMware misses out on vital information this way, because people just don’t have the time to comment.

As I have noticed with other VMware exams, the scenarios are never anywhere near realistic (at least not by European standards). Also, having questions about, for example, the bandwidth of a T1 line is not very bright, given the fact that the exam is to be held worldwide. In Europe, we have no clue what a T1 line is.

But the REAL problem with this design exam is that, in my opinion, this is NO design exam at all. Sorry VMware, I was very disappointed. If this were a real design exam, I would actually encourage people to bring all the PDFs and books they can find; it would (should!) not help you. Questions like “how to change a defective HBA inside an ESX node without downtime”? Sorry, nice for the enterprise exam, but it has absolutely nothing to do with designing. Offload that kind of stuff to the enterprise exam please! And if you have to ask about things like this, then ask about how to go about the rezoning of the fiber switches. That would at least prove some understanding of how you design an FC network. But that question was lacking.

There are numerous other examples of this, all about just knowing that little tiny detail to get you a passing score. That is not designing! I had been hoping for questions like how many spindles to design under VMware and why. When to use SATA and when not to. Customers having blades with only two uplinks. Things that actually happen in reality, things that bring out the designer in you (or should bring out the designer in you)! Designing is not knowing about what action will force you to shut a VM and which action will not. <Sigh>.

I know, the answers could not be A, B, C or D. This exam should have open questions. More work for VMware, but hey, that is life. Have people pick up their pen and write it down! Give them space for creativity, avoiding the pitfalls that were sneaked into the scenario. These things are what should be a quality of a designer anyway. That’s the way to get it tested.

I’ll just keep hoping the final part of the VCDX certification (defending a design for a panel) will finally bring that out. If it doesn’t, we’ll end up with just another “VCP++” exam, for which everyone can get a passing score if you study for a day or two. I hope VCDX will not become “that kind of a certification”!

I hope VMware will look at comments like these in a positive manner, and create an exam which can actually be called a DESIGN exam. VMware, Please PLEASE put all the little knowledge tidbits into the Enterprise exam, and create a design exam that actually forces people to DESIGN! Until that time, I’ll be hoping the final stage of VCDX will give back my hopes that this certification will really make a difference.

VMware HA, slotsizes and constraints – by Erik Zandboer

There is always a lot of talk about VMware HA, and how it works with slotsizes and determines host failover using the slotsizes. But nobody seems to know exactly how this is done inside VMware. By running a few tests (on VMware ESX 3.5U3 build 143128), I was hoping to unravel how this works.

Why do we need slotsizes

In order to be able to guess how many VMs can be run on how many hosts, VMware HA does a series of simple calculations to “guesstimate” the possible workload given a number of ESX hosts in a VMware cluster. Using these slotsizes, HA determines whether more VMs can be started on a host in case of failure of one (or more) other hosts in the cluster (only applicable if HA runs in the “Prevent VMs from being powered on if they violate constraints” mode), and how big the failover capacity is (failover capacity is basically how many ESX hosts in a cluster can fail while maintaining performance on all running and restarted VMs).

Slotsizes and how they are calculated

The slotsize is basically the size of a default VM, in terms of used memory and CPU. You could think of a thousand smart algorithms in order to determine a worst-case, best-case or somewhere-in-between slot size for any given environment. VMware HA however does some pretty basic calculations on this. As far as I have figured it out, here it comes:

Looking through all running (!!) VMs, find the VM with the highest cpu reservation, and find the VM with the highest memory reservation (actually reservation+memory overhead of the VM). These worst-case numbers are used to determine the HA slotsize.

A special case in this calculation is when reservations are set to zero for some or all VMs. In this case, HA uses its default settings for such a VM: 256MB of memory and 256MHz of processing power. You can actually change these defaults, by specifying these variables in the HA advanced settings:

das.vmCpuMinMHz

das.vmMemoryMinMB
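As a sketch of the slotsize rule as I have described it (this is my reading of the observed behaviour, not VMware's documented algorithm), the Python below takes the highest CPU reservation and the highest memory reservation plus overhead over all running VMs, substituting the defaults wherever a reservation is zero. The dictionary keys are hypothetical names chosen for this example.

```python
def slot_size(running_vms, default_cpu_mhz=256, default_mem_mb=256):
    """Return (cpu_mhz, mem_mb) of one HA slot for the given running VMs.

    A reservation of 0 means "not set"; the default value (which the
    das.vmCpuMinMHz / das.vmMemoryMinMB settings would override) is used instead.
    """
    cpu = max(vm["cpu_reservation_mhz"] or default_cpu_mhz for vm in running_vms)
    mem = max(
        (vm["mem_reservation_mb"] or default_mem_mb) + vm["mem_overhead_mb"]
        for vm in running_vms
    )
    return cpu, mem


# Twelve running VMs without reservations, each with 96MB of memory overhead.
vms = [
    {"cpu_reservation_mhz": 0, "mem_reservation_mb": 0, "mem_overhead_mb": 96}
    for _ in range(12)
]

print(slot_size(vms))                                          # built-in defaults
print(slot_size(vms, default_cpu_mhz=128, default_mem_mb=128))  # lowered defaults
```

Note that a single VM with a large reservation is enough to inflate the slotsize for the whole cluster, since only the worst-case values count.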

In my testing environment, I had no reservations set, had not specified these variables, and did not have any host failover capacity (failover capacity = 0). As soon as I introduced these variables, both set at 128, my failover level instantly increased to 1. When I started to add reservations, I was done quite quickly: adding a reservation of 200MB to a single running VM changed my failover level back to 0. So yes, my environment proves to be a little “well filled” 😉

Failover capacity

Now that we have determined the slotsize, the next question is: how does VMware calculate the current failover capacity? This calculation is also pretty basic (in contrast to the thousand interesting calculations you could think of): VMware HA takes the ESX host with the least resources and calculates the number of slots that would fit into that particular host. That number then becomes the number of slots per ESX host, and it is also projected onto any larger hosts in the ESX cluster! What?? Exactly: using ESX hosts with different amounts of memory and/or processing power in an HA-enabled cluster impacts the failover level!

In effect, these calculations are done for both memory and CPU. Again, the worst-case value is used as the number of slots you can put on any ESX host in the cluster.

After both values are known (slotsize and number of slots per ESX host), it is a simple task to calculate the failover level: Take the sum of all resources, divide them by the slotsize resources, and this will give you the number of slots available to the environment. Subtract the number of running VMs from the available slots, and presto, you have the number of slots left. Now divide this number by the number of slots per host, and you end up with the current failover level. Simple as that!

Example

Let's say we have a testing environment (just like mine 😉 ), with two ESX nodes in an HA-enabled cluster, configured with 512MB of SC memory. Each has a 2GHz dualcore CPU and 4GB of memory. On this cluster, we have 12 VMs running, with no reservations set anywhere. All VMs are Windows 2003 std 32 bit, which gives a worst-case memory overhead of (in this case) 96MB.

At first, we have no reservations set, and no variables set. So the slotsize is calculated as 256MHz / 256MByte. As both hosts are equal, I can use any of the hosts for the number of slots per hosts calculation:

CPU –> 2000MHz x 2 (dualcore) = 4000 MHz / 256 MHz = 15,6 = 15 slots per host

MEM –> (4000-512) Mbytes / (256+96) Mbytes = 9,9 = 9 slots per host

So in this scenario 9 slots are available per host, which in my case means 9 slots x 2 hosts = 18 slots for the total environment. I am running 12 VMs, so 18 – 12 = 6 slots left. 6/9 = 0,67 hosts left for handling failovers. Shame, as you can see I need another 0,33 hosts to have any failover capacity.

Now in order to change the stakes, I put in the two variables, specifying CPU at 300MHz, and memory at 155Mbytes (of course I just “happened” to use exactly these numbers in order to get both CPU and memory “just pass” the HA-test):

das.vmCpuMinMHz = 300

das.vmMemoryMinMB = 155

Since I have no reservations set on any VMs, these are also the highest values to use for slotsizes. Now we get another situation:

CPU –> 2000MHz x 2 (dualcore) = 4000 MHz / 300 MHz = 13.3 = 13 slots per host

MEM –> (4000-512) Mbytes / (155+96) Mbytes = 13.9 = 13 slots per host

So now 13 slots are available per host. You can imagine where this is going when using 12 VMs… In my case 13 slots x 2 hosts = 26 slots for the total environment. I am running 12 VMs, so 26 – 12 = 14 slots left. 14/13 = 1,08 hosts left for handling failovers. Yes! I just upgraded my environment to a current failover level of 1!

Finally, let's look at a situation where I would upgrade one of the hosts to 8GB. Yep, you guessed right: the smaller host will still force its values into the calculations, so basically nothing would change. This is where the calculations go wrong, or even seriously wrong: assume you have a cluster of 10 ESX nodes, all big and strong, but you add a single ESX host having only a single dualcore CPU and 4GB of memory. Indeed, this would impose a very small number of slots per ESX host on the whole cluster. So there you have it: yet another reason to always keep all ESX hosts in a cluster equal in sizing!
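The whole calculation, including the effect of the smallest host, fits in a few lines of Python. This is a sketch of my estimate of what HA does, not VMware's actual code; the function name and the (cpu, memory) tuple layout are my own, and "4GB" is taken as 4000MB, just as in the example.

```python
def failover_level(hosts, slot_cpu_mhz, slot_mem_mb, running_vms):
    """Return (slots_per_host, failover_level) for a cluster.

    hosts is a list of (cpu_mhz, usable_mem_mb) tuples. The smallest host,
    and the worst of CPU and memory on it, dictates the slots per host.
    """
    slots_per_host = min(
        min(cpu_mhz // slot_cpu_mhz, mem_mb // slot_mem_mb)
        for cpu_mhz, mem_mb in hosts
    )
    if slots_per_host == 0:
        return 0, 0
    total_slots = slots_per_host * len(hosts)
    spare_slots = max(0, total_slots - running_vms)
    # Only whole hosts count as failover capacity.
    return slots_per_host, spare_slots // slots_per_host


# Two hosts: 2 x 2000MHz, 4000MB minus 512MB of service console memory.
two_hosts = [(4000, 4000 - 512)] * 2

print(failover_level(two_hosts, 256, 256 + 96, 12))  # default slotsize
print(failover_level(two_hosts, 300, 155 + 96, 12))  # das.* variables set

# One host upgraded to 8000MB: the smaller host still sets the slots per host.
mixed_hosts = [(4000, 4000 - 512), (4000, 8000 - 512)]
print(failover_level(mixed_hosts, 300, 155 + 96, 12))
```

The upgraded host buys nothing in this model, which is exactly why mixing host sizes in one cluster hurts the failover level.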

Looking at these calculations, I was actually expecting the tilt point to be at 12 slots per host (because I have 12 VMs active), not 13. I might have left out some values of smaller influence somewhere, like used system memory on the host (host configuration… Memory view). Also, the Service Console might count as a VM?!? Or maybe VMware just likes to keep “one free” before stating that yet another host may fail… This is how far I got; maybe I’ll be able to add more detail as I test more. So the calculations shown here may not be dead-on, but they are at least precise enough for any “real life situation” estimates.

So what is “Configured failover”?

This setting is related to what we have just calculated. You must specify this number, but what does it do?

As seen above, VMware HA calculates the current host failover capacity, which is a calculation based on resources in the ESX hosts, the number of running VMs and the resource settings on those VMs.

Now, the configured failover capacity determines how many host failures you are willing to tolerate. You can imagine that if you have a cluster of 10 ESX hosts, and a failover capacity of one, the environment will basically come to a complete halt if five ESX nodes fail at the same time (assuming all downed VMs have to be restarted on the remaining five). In order to make sure this does not happen, you have to configure a maximum number of ESX hosts that may fail. If more hosts fail than the specified number, HA will not kick in. So, it is basically a sort of self-preservation of the ESX cluster.

hostd-hara-kiri – by Erik Zandboer

Today I got a question from a customer: his hosts appeared to reboot every few hours, or at least show up grey in vCenter. I found the issue, a clear case of hostd-hara-kiri…!

When I heard of this issue, the first and only thing that came to mind was hostd running out of memory. A quick look at /var/log/vmware/hostd.log showed the issue: “Memory checker: Current value 174936 exceeds soft limit 122880“. I advised to raise the service console memory, although I am not sure this resolves the issue, since the limits for hostd memory are not changed when you alter the SC memory… So as a “backup” I told him to make the changes stated below in order to at least make sure the problem would not come back.

Anyway, I decided to check out my testing environment. I too had the hostd.log being filled up with these messages. The soft limit is almost constantly broken, which is set by VMware at 122880. The hard limit is set at 204800. Hard limit??? So what happens when the hard limit is reached? – Exactly, hostd-hara-kiri.

One of the ESX servers I looked at, showed a value of 204660, geez it must be my “lucky” day! I exported the hostd.log, imported it in Excel, and managed to get out this graph:

Here you see the hostd memory usage climbing to its summit: hostd-hara-kiri.

(Not so) reassured by the outcome of the graph and its linear behaviour, I started to tail the hostd.log. Man, this is more exciting than watching a horror movie 😉 ! After a short while, the inevitable happened: “Current value 204828 exceeds hard limit 204800. Shutting down process.” KA-BOOOM! Hostd was gone, the host went grey for about 30 seconds in vCenter, then came back up as if nothing had happened. And they say there is no such thing as reincarnation! I think a lot of people must have witnessed this, thought it to be “odd”, and went on with their lives.

In fact, after looking at one of my ESX test hosts through all hostd logging I could lay my hands on (they rotate quite fast because of these once-every-30-second events), I put together this graph. Lucky me, I managed to capture a controlled reboot and a hostd-hara-kiri event:

Hostd memory climbing, going down because of a host reboot, then climbing again followed by a plummet = hostd-hara-kiri

As shown in the graph, a full cycle from controlled reboot to hara-kiri appears to take somewhere around 6000 samples for this particular host. A warning appears every 30 seconds, and I have removed every sample except every 10th one. So this sets the hara-kiri interval at about 6000*10*30 = 1.800.000 seconds, or 20.8 days. Not being very happy with these results, I decided to try and avoid this repeating “reincarnation event”. And I soon found a workaround (not sure if this is the solution), by editing /etc/vmware/hostd/config.xml. I added these lines right below <config>:

<hostdWarnMemInMB>200</hostdWarnMemInMB>

<hostdStopMemInMB>250</hostdStopMemInMB>

This basically sets the limits to a higher value. The warnings will now appear where it used to be hostd-hara-kiri time, and the true hara-kiri threshold is raised from 200MB to 250MB. This at least delays the problem of hostd reincarnation, but I am unsure about the true cause at this time. It appears to have something to do with stuff installed inside the service console of ESX: servers having for example HP agents installed appear to use more hostd memory than “clean” service consoles, and these reincarnation events can occur in hours instead of days. That, and the linear climbing of used memory, points to… a memory leak. I expect VMware has a bug to fix. It might be a nasty one too; I believe it has been inside ESX for a long time (maybe even since 3.5U2 or before).

So: if you have intermittent reboots or at least disconnects from vCenter, check the hostd log for these limit warnings.
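Instead of Excel, a few lines of Python can pull the memory values out of the log and estimate when the hard limit will be hit. This is only a sketch: the regular expression is based solely on the two messages quoted above, the rest of the log line format is unknown to me, and the sample values after the first one are made up for the example.

```python
import re

# Matches the fragments quoted above, e.g.
# "Current value 174936 exceeds soft limit 122880"
PATTERN = re.compile(r"Current value (\d+) exceeds (soft|hard) limit (\d+)")


def memory_samples(lines):
    """Return a list of (value, kind, limit) for every memory checker message."""
    samples = []
    for line in lines:
        match = PATTERN.search(line)
        if match:
            samples.append((int(match.group(1)), match.group(2), int(match.group(3))))
    return samples


def seconds_until(limit, samples, interval_s=30):
    """Linear extrapolation from first to last sample; None if usage is not growing."""
    values = [value for value, _, _ in samples]
    if len(values) < 2 or values[-1] <= values[0]:
        return None
    growth_per_sample = (values[-1] - values[0]) / (len(values) - 1)
    return (limit - values[-1]) / growth_per_sample * interval_s


# Hypothetical excerpt; only the first message is taken from a real log.
sample_log = [
    "Memory checker: Current value 174936 exceeds soft limit 122880",
    "some unrelated hostd line",
    "Memory checker: Current value 174946 exceeds soft limit 122880",
    "Memory checker: Current value 174956 exceeds soft limit 122880",
]

samples = memory_samples(sample_log)
remaining = seconds_until(204800, samples)
print(f"about {remaining / 3600:.1f} hours until the hard limit")
```

To run it against a real host, feed it the lines of /var/log/vmware/hostd.log (and its rotated copies) instead of `sample_log`.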

Ye Olde Snapshot – by Erik Zandboer

A lot of people have had more or less unpleasant experiences with forgotten snapshots. You log in in the morning, and a VM is down. “Strange” you think. After some investigation, you find out the VMFS volume on which the VM was running is full. Completely full. And to your horror you find out why: a forgotten snapshot is in place which has now grown until it filled up the VMFS volume.

What exactly does a snapshot do

The first thing to understand is exactly how a snapshot works. When you add a snapshot, the original virtual disk is no longer written to. Each block that should be written to this file is redirected to a snapshot file instead. So basically this snapshot file holds all changes made to the virtual disk after the snapshot was made. The more changes you make to blocks not changed before, the larger the snapshot file will grow (in steps of 16MB). Each changed block is stored inside the snapshot file only once. This means that a snapshot file can reach a sometimes staggering size, equal or almost equal to the size of the original virtual disk (defragmentation inside a VM is my personal favorite 😉 ).
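A toy model in Python shows the mechanism. It has nothing to do with the actual on-disk format of VMware snapshot files; the class and method names are made up for this illustration, and the 16MB growth steps are left out.

```python
class SnapshottedDisk:
    """Toy model of a virtual disk with one snapshot in place."""

    def __init__(self, base_blocks):
        self.base = dict(base_blocks)  # original disk: no longer written to
        self.delta = {}                # snapshot file: changed blocks only

    def write(self, block_no, data):
        # Writes are redirected; rewriting a block replaces its entry,
        # so every changed block is stored only once.
        self.delta[block_no] = data

    def read(self, block_no):
        # The snapshot file wins; unchanged blocks come from the base disk.
        return self.delta.get(block_no, self.base.get(block_no))

    def delete_snapshot(self):
        """Commit all recorded changes back into the original disk."""
        self.base.update(self.delta)
        self.delta.clear()


disk = SnapshottedDisk({n: b"original" for n in range(4)})
disk.write(1, b"first change")
disk.write(1, b"second change")  # same block again: no extra growth
disk.write(2, b"other change")

print(len(disk.delta), "blocks in the snapshot file")
disk.delete_snapshot()
print(disk.base[1])
```

Since the snapshot holds at most one entry per block, it can never grow much beyond the size of the original disk, but it can certainly get that big.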

Monstrous snapshot – now what?

If you “forget” about a snapshot, chances are you will never notice it, right until it might be too late. Especially if you snapshotted a very large virtual disk, and have plenty of room left on the VMFS, snapshots can grow to immense sizes. Cleaning them up can be very time consuming indeed.

If you have found a very old snapshot file which has grown very large (e.g. 10-40GB), you can actually delete the snapshot without problems, thereby committing all changes recorded in the snapshot file back to the original disk. So you end up with only the virtual disk, with the same contents it had while the snapshot was in place, just without the snapshot. But beware: if you delete the snapshot from vCenter (got to get used to that name instead of VirtualCenter), you might very well get a timeout. This has given some people some really sweaty fingers. Don’t panic; log in to the ESX node itself, and you’ll probably see that the snapshot is still being removed. It might take an hour, it might take four hours, but in time the snapshot should remove itself.

VMFS full – How to get the VM running again

If a forgotten snapshot fills up the entire VMFS, chances are that your snapshotted VM stops. This is because the VM is trying to write to its disk, and the snapshot needs to grow but it can’t. There are two ways to resolve this: 1) make room on the VMFS, or 2) delete the snapshot while the VM remains off. In a production environment, option 2) might not work for you, as deletion of large snapshots might take hours. So we’re back to making room on the VMFS. Maybe you can delete or move another VM from the VMFS. Maybe you have some ISOs lying about on the VMFS that you can delete. Then you can start your troubled VM again, and remove the snapshot while the VM is running. A last resort might even be to give the VM less memory, or put its swapfile in another location (possible in ESX 3.5u3). Then start to delete the snapshot right away, before it manages to fill up the VMFS again.

I have even heard of people who put a 2GB dummy file on each VMFS volume, so that when it comes to these issues they just delete the file – and gain 2 Gbytes of space. If forgetting snapshots is your habit, you might consider this as a “best practice” for your environment…

50GB+ snapshot – Delete or…?

What if you have a really big snapshot (and I mean 50+ GB), or you might even have multiple huge snapshots in place? Or even have snapshots that appear to be garbled in their linkage (horrors like “cannot delete snapshot because the base disk was modified after the snapshot was taken”). You might not want to risk deletion of these snapshot(s). There is another way to recover safely, especially if you run Windows 2003 or later, which should be much more advertised: VMware Converter! It is really a magical tool. Not only for P2V, but also in cases exactly like this. While you keep your VM running, just point Converter to the VM while telling Converter it is a physical machine. Converter will install its agent inside the VM, and start to duplicate your VM to another LUN. After the conversion, the target VM will be free of any snapshots!

This option also works great if you have issues with your SAN. I have seen environments that had LUNs you could not even browse through any more (not from the datastore browser nor via ssh), but VMs placed there were still running OK. It shows the stability and enterprise-readiness of ESX for sure, but how to recover? Even restarting the VM or scanning LUNs is risky here. The simple answer was: Use Converter. Simply use Converter! To make a short story even shorter: Converter saved the day 🙂

So I guess as a final word I should say: For VM recovery from even the weirdest disk-related issues, consider using VMware Converter!

The temptation of "Quantum-Entangling" Virtual Machines – by Erik Zandboer

More and more vendors of SANs and NASes are starting to add synchronous replication to their storage devices. Some are even able to deliver the same data locally on different sites using NFS. This sounds great, but more and more people tend to use VMware clusters across sites, and that is where it goes wrong: VMs run here, using storage there. It all becomes “quantum entangled”, leaving you nowhere when disaster strikes.

These storage offerings lead people to create a single VMware HA cluster across sites. And really, I cannot blame them. It all sounds too good to be true: “If an ESX node at site A fails, the VMs are automagically started on an ESX server at another site. Better yet, you can actually VMotion VMs from site A to site B and vice versa.” Who would not want this?

VMware thinks differently, and with reason. They state that a VMware cluster is meant for failover and load balancing between LOCAL ESX nodes, and that failover between sites is a whole other ballgame (where Site Recovery Manager or SRM comes in). This decision was made for good reasons, as I will try to explain.

How you should not do DR

If you have one big single storage array across sites, you could run VMs on either side, using whatever storage is local to that VM. That way, you do not have your disk access from VM to storage over the WAN. But when DRS kicks in, the VMs will start to migrate between ESX nodes, and between sites! And that is where it goes wrong: the VMs and their respective storage will get “entangled”. I like to call that “quantum-entanglement of VMs”, because the two are kind of alike, and of course, because I can 🙂

Even without DRS, but with manual VMotions, in time you will definitely lose track of which VM runs where, and more important: which site its storage lives on. In the end 50% of your VMs might be using storage on the other site, loading the WAN with disk I/O and introducing the WAN’s latency to the disk I/O of the VMs that have become “stretched”.
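To make the “stretched” VM idea concrete, here is a minimal sketch of how you could flag them from an inventory export. The `VM` record, the site labels and the sample inventory are all hypothetical; nothing here calls a VMware API, it only assumes you can list, per VM, the site of the ESX host it runs on and the site of the array behind its datastore.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VM:
    # Hypothetical record; fill it from whatever inventory export you have.
    name: str
    host_site: str       # site of the ESX host currently running the VM
    datastore_site: str  # site of the array that backs the VM's datastore


def find_stretched_vms(vms):
    """Return the VMs whose compute and storage live on different sites."""
    return [vm for vm in vms if vm.host_site != vm.datastore_site]


# Made-up example inventory.
inventory = [
    VM("web01", host_site="A", datastore_site="A"),
    VM("db01", host_site="B", datastore_site="A"),   # VMotioned to B, disks still at A
    VM("mail01", host_site="B", datastore_site="B"),
]

stretched = find_stretched_vms(inventory)
for vm in stretched:
    print(f"{vm.name}: runs at site {vm.host_site}, storage at site {vm.datastore_site}")
```

Every VM this reports is doing its disk I/O across the WAN, and is a VM you would have to untangle by hand during a failover.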

All this is pretty bad, but let’s say something really bad happens: your datacenter at one location is flooded, and management decides you have to perform a failover to the other site. Now panic strikes: There is probably no Disaster Recovery plan, and even if there is, it is probably way off from being actually usable. VMs have VMotioned to the other site, storage has been added from either side. VMs have been created somewhere, using storage somewhere and possibly everywhere. In other words: You have no idea where to begin, let alone how to automate or test a failover.

VMware’s way of doing DR

To overcome the problems with this “entanglement”, VMware defines a few clear design limitations as to how you should set up DR failover, with SRM helping out if you choose to. But even without SRM, it is still a very good way of designing DR.

VMware states that you should keep a VMware cluster within a single site. DRS and HA will then take care of the “smaller disasters” such as NICs going down or ESX nodes failing: basically all events that are not to be seen as a total disaster. These failovers are automatic; they are corrected without any human intervention.

The other site should be totally separated (from a storage point of view). The only connection between the storage on both sides should be a replication connection. So both sites are completely holding their own as far as storage is concerned. Out of scope of this blog, yet VERY important: When you decide on using asynchronous replication, make sure your storage devices can guarantee data integrity across both sites! A lot of vendors “just copy blocks” from one site to the other. Failure of one site during this block copy can (and will) lead to data corruption. For example, EMC storage creates a snapshot just before an asynchronous replication starts, and can revert to that snapshot in case of real problems. Once again, make sure your SAN supports this (or use synchronous replication).

Now let’s say disaster strikes. One site is flooded. HA and DRS are not able to keep up, and servers go down. This is beyond what the environment should be allowed to “fix” by itself, so management decides to go for a failover. Using SRM, it should only take the press of a button, some patience (and coffee); but even without SRM you will know exactly what to do: Make the replicated data visible (read/write) on the other site, browse for any VMs on it, register them, and start them. Even without any DR plan in place, it is still doable!

Where to leave your DR capacity: 50-50 or 100-0?

So let’s assume you went for the “right” solution. The next thing to decide is what you are going to run where. Having a DR site, it would make sense to run all VMs (or at least almost all VMs) on the primary site, and leave the DR site dormant. Even better, if your company structure allows it, run test and development at the DR site. In case of a major disaster you can fail over production to the DR site, losing only test and development (if that is allowable).

The problem often is your manager: He paid a lot of money for the second SAN and the DR ESX nodes. Now you will have to explain that these will do absolutely nothing as long as no disaster takes place. Technically there is no difference: You either run both sites at 50%, or one at 100% and the other dormant at 0%. Politically it is much more difficult to sell.

If you use SRM, there is a clear business case: If you run at 50-50, SRM needs double the licenses. And SRM is not cheap. Without SRM, it takes more explanation, but in my opinion running at 100-0 is still the way to go. As an added bonus, you might use fewer ESX nodes on the DR site if you do not have to fail over the full production environment (which will reduce cost without SRM as well).

Conclusion

–> Don’t ever be tempted to quantum-entangle your VMs and their storage!

Performance tab "Numbers" demystified – by Erik Zandboer

“CPU ready time? Always use esxtop! The performance tab stinks!” is what I hear all the time. But in reality, they don’t stink, they’re just misunderstood. This edition of my blog will try to clarify this using the famous CPU ready times as an example.

A lot of people have questions about items like CPU ready times. Advice is usually to run esxtop. I have always been a fan of the performance tabs instead. However, the numbers presented there are very often unclear to people. The same goes for disk IOps. In fact any measured value with a “number” as the unit appears to suffer from this. There is no need: the numbers are valid, you just have to know how to interpret them. An added bonus is, of course, that you get insight through time into your precious CPU ready times, instead of a quick look in esxtop. If you tune the VirtualCenter settings right, you can even see the CPU ready times of your VM yesterday or the day before!

Comparing esxtop to performance in VI client

When you compare the values in both esxtop and the performance monitor, you could notice a clear difference in these values. While a VM has a %ready in esxtop of about 1.0%, in the performance graph (real-time view) it is all of a sudden a number around 200 ms?!? As strange as this sounds – the number is correct. The secret lies in the percentage versus the number of milliseconds. It is the same thing, but shown differently.

The magic word: sampletime

When you start to think of it: The number presented in the performance tab is in the unit of milliseconds. So basically it is the number of milliseconds the CPU has been “ready” as opposed to the percentage ready in esxtop. But how many milliseconds out of how much time you might wonder? That is where the magic word comes in – sampletime!

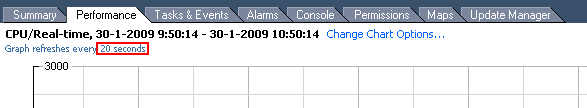

Sampletime is basically the time between two samples. As you might know, the sampletime in the real-time view of the performance tab is 20 seconds (check upper left of the window):

Sampletime - yes! it is 20 seconds

So VMware could have taken the percentage ready from esxtop, and displayed these instant values to form this graph. In reality however it is even nicer: VMware is able to measure the number of milliseconds of CPU ready time in between these two samples! Once you grasp this, it all becomes clear. If you see a value of 200 ms in real-time view, in esxtop-like representation you would have 200/20 = 10 milliseconds per second, or 0.01 seconds per second, which is dead-on 1% (how DO I manage 😉 )
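The same conversion as a small Python sketch, for those who prefer to script it. The function name `ready_percent` is my own invention for illustration; it just divides the ready time by the length of the sample.

```python
def ready_percent(ready_ms, sample_seconds):
    """Convert a CPU ready value from the performance tab (milliseconds
    accumulated over one sample) into an esxtop-style percentage."""
    sample_ms = sample_seconds * 1000.0  # length of one sample in milliseconds
    return ready_ms / sample_ms * 100.0


# The real-time view samples every 20 seconds.
realtime = ready_percent(200, 20)
print(f"200 ms in a 20 s sample = {realtime:.2f}% ready")
```

Feed it the sampletime of whatever view you are looking at, and the graph values become directly comparable to %ready in esxtop.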

Changing the statistics level in VirtualCenter

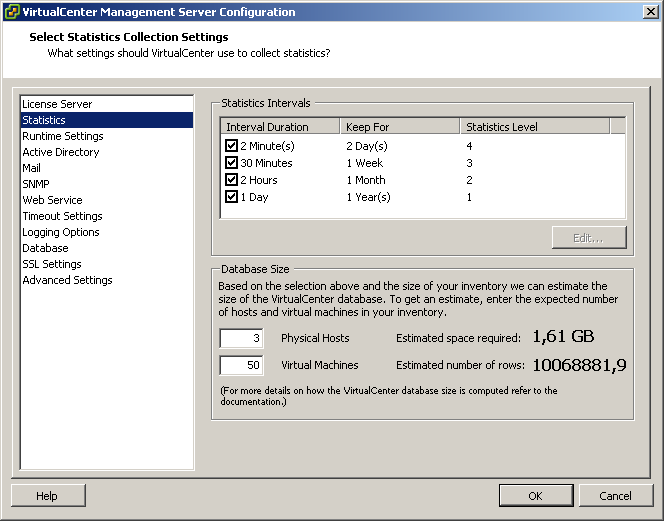

So now we have proven that the numbers can actually be matched – time for the nice bonus. If you login to VirtualCenter via the VI client, you can edit the settings of VirtualCenter by clicking on the “administration” drop-down menu, and selecting “VirtualCenter Management Server Configuration” (whoever came up with that monstrous name!). In this screen you’ll see an item called “statistics”. There you can tune a see more statistics over more time:

Altering statistics levels in VirtualCenter

In this example I have increased the level of statistics, and I have also changed the first interval duration to two minutes. In this setup, I can see CPU ready times of all my VMs for one entire week now:

CPU ready times for an entire week

Take care: your database will grow much larger, and VMware does not encourage you to keep this level of statistics on for an extended period of time, although I have been running these levels for months now without issues on a small (2 ESX server) environment. My database size is now around 1.4GB, which is not alarming, although the size grows quickly with the number of ESX hosts you have.

When you look closely at the graph (an almost idle webserver which runs virusscan at 5AM), you’ll notice that CPU ready times shoot up to around 50,000 milliseconds. Sounds alarming, right? But do not forget, in this case I am looking at a weekly graph, which samples at a 30-minute interval. So I should do my calculation once again: 30 minutes = 30*60 = 1800 seconds (=sampletime). So 50000 / 1800 = 27.8 milliseconds per second, or 0.0278 seconds per second. So CPU ready is peaking at just below 3%, which is acceptable (usually below 10% is considered ok). Not bad at all! But it would have been hard to find using esxtop though (I hate getting up early).
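You can also turn the calculation around and ask which graph value corresponds to the 10% rule of thumb at each sampletime. A minimal sketch: the helper name is hypothetical, and the intervals are simply the ones mentioned in this post (20 seconds real-time, my two-minute first interval, and the 30-minute weekly graph).

```python
def ready_ms_for_percent(percent, sample_seconds):
    """Milliseconds of CPU ready within one sample that equal `percent` ready."""
    return sample_seconds * 1000.0 * percent / 100.0


# Sample intervals taken from this post; adjust to your own statistics settings.
intervals = [("real-time", 20), ("2 minutes", 120), ("30 minutes", 1800)]

thresholds = {label: ready_ms_for_percent(10, seconds) for label, seconds in intervals}
for label, ms in thresholds.items():
    print(f"{label}: 10% ready = {ms:.0f} ms per sample")
```

So a value that would be dramatic in the real-time view can be perfectly harmless in a weekly graph.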

Any number

The calculations I have done are valid for all measured values that have a “numbers” unit within the Performance tab. So it also works for disk operations (like “Disk read requests”) and networking (like “Network packets received”).

So if you are looking for esxtop output through time in a graph: Take a second look at your Performance tabs!

Scaling VMware hot-backups (using esXpress) – by Erik Zandboer

There are a lot of ways of making backups. When using VMware Infrastructure there are even more. In this blog, I will focus on so-called “hot backups”: backups made by snapshotting the VM in question (on an ESX level), and then copying the (now temporarily read-only) virtual disk files off to the backup location. And especially on how to scale these backups into larger environments.

Say CHEESE!

Hot backups are created by first taking a snapshot. A snapshot is quite a nasty thing. First of all, all virtual disks that make up a single VM have to be snapped at exactly the same time. Secondly, if at all possible, the VM should flush all pending writes to disk just before making this snapshot. Quiescing is supported in the VMware Tools (which should be installed inside your VM). Quiescing will flush all write buffers to disk. This is effective to some extent, but not enough for database applications like SQL, Exchange or Active Directory. For those cases Microsoft came up with VSS. VSS will tell VSS-enabled applications to flush every buffer to disk and hold any new writes for a while. Then the snapshot is made.

There is a lot of discussion about making these snapshots, and about quiescing or using VSS. I will not get into that discussion; it is too application related for my taste. For the sake of this blog, you just need to know it is there 🙂

Snapshot made – now what?

After a snapshot is made, the virtual disk files of the VM have become read-only accessible. It is time to make a copy. And this is where different backup vendors start to really do things differently. As far as I have seen, there are several different ways of taking out these files:

Using VCB

VCB is VMware’s enabler for primarily making backups through a fibre-based SAN. VCB enables a “view” to the internals of a virtual disk (for NTFS), or gives a view to an entire virtual disk file. From that point, any backup software can make a backup of these files. It is fast for a single backup stream, but requires a lot of local storage on the backup proxy, and does not easily scale up to a larger environment. The variation using the network as carrier is clearly a suboptimal solution compared to other network-based solutions.

Using the service console

This option installs a backup agent inside the service console, which takes care of the backup. Do not forget, an FTP server is also an agent in this situation. It is not a very fast option, especially since the service console network is “crippled” in performance. This scenario does not scale very well to larger environments.

Using VBAs

And here things get interesting – Please welcome esXpress. I like to call esXpress the “Software version” of VCB. Basically, what VCB does – make a snapshot and create a view to a backup proxy – is what esXpress does as well. The backup proxy is no hardware server though, or a single VM, but numerous tiny appliances, all running together on each and every ESX host in your cluster! You guessed it – see it and love it. I did anyways.

esXpress – What the h*ll?

The first time you see esXpress in action, you might think it is a pretty strange thing – First you install it inside the service console (you guessed right – There is no ESXi support yet). Second it creates and (re)configures tiny Virtual Appliances all by itself.

When you look closer and get used to these facts – It is an awesome solution. It scales very well, each ESX server you add to your environment starts acting as a multiheaded dragon, opening 2-8 (even up to 16) parallel backup streams out of your environment.

esXpress is also the only solution I have seen which does not have a SPOF (Single Point of Failure). ESX host failure is countered by VMware HA, and the VMs restarted on other ESX servers are backed up from the remaining hosts. When a backup target fails, esXpress automatically fails over to other targets.

Setting up esXpress can seem a little complex, because there are numerous options you can configure. You can get it to do virtually anything: delta backups (changed blocks only), skipping of virtual disks, different scheduling of backups per VM, compression and encryption of the backups, and SO many more. Excellent!

Finally, esXpress has the ability to perform what is called “mass restores” or “simple replication”. This function will automatically restore any new backups found on the backup target(s) to other locations. YES! You can actually create a low-cost Disaster Recovery solution with esXpress – RPO (Recovery Point Objective) is not too small (about 4-24 hours), but the RTO (Recovery Time Objective) can be small, 5-30 minutes is easily accomplished.

The real stuff: Scaling esXpress backups

Being able to create backups is a nice feature for a backup product. But what about scaling to a larger environment? esXpress, unlike most other solutions, scales VERY well. Although esXpress is able to back up to VMFS, I will focus in this blog on backing up to the network, in this case FTP servers. Why? Because it scales easily! The following makes the scaling so great:

- For every ESX host you add, you add more Virtual Backup Appliances (VBAs), which increases the total backup bandwidth out of your ESX environment;

- Backup uses CPU from the ESX hosts. Especially because CPU is hardly an issue nowadays, it scales with usually no extra costs for source/proxy hardware;

- Backups are made through regular VM networks (not the service console network), so you can easily add more bandwidth out of the ESX hosts and bandwidth is not crippled by ESX;

- Because each ESX server runs multiple VBAs at the same time, you can balance the network load very well across physical uplinks, even when you use PORT-ID load balancing;

- More backup target bandwidth can be realized by adding network interfaces to the FTP server(s) when they (and your switches) support load-balancing through multiple NICs (etherchannel/port aggregation);

- More backup target bandwidth can also be realized by adding more FTP targets (esXpress can load-balance VM backups across these FTP targets).
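To get a feel for how these factors add up, here is a rough back-of-the-envelope sketch. All numbers and function names are made up for illustration, and the model is deliberately naive: it only compares aggregate network bandwidth on the source and target side, ignoring disk speed, compression, protocol overhead and switch limits.

```python
def backup_streams(esx_hosts, vbas_per_host):
    """Total number of parallel backup streams leaving the ESX environment."""
    return esx_hosts * vbas_per_host


def network_ceiling_mbit(esx_hosts, uplink_mbit_per_host, ftp_targets, nic_mbit_per_target):
    """Naive upper bound on backup throughput: the smaller of what the ESX
    hosts can send and what the FTP targets can receive."""
    source = esx_hosts * uplink_mbit_per_host
    target = ftp_targets * nic_mbit_per_target
    return min(source, target)


# Hypothetical environment: 4 ESX hosts with 4 VBAs and one 1 Gbit backup
# uplink each, writing to 2 FTP targets with one 1 Gbit NIC each.
streams = backup_streams(esx_hosts=4, vbas_per_host=4)
ceiling = network_ceiling_mbit(4, 1000, 2, 1000)
print(f"{streams} parallel streams, network ceiling {ceiling} Mbit/s")
```

In this made-up case the FTP targets are the bottleneck, which is exactly the situation where adding NICs or extra FTP targets pays off.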

Even better stuff: Scaling backup targets using VMware ESXi server(s)

Although ESXi is not supported with esXpress, it can very well be leveraged as a “multiple FTP server” target. If you do not want to fiddle with load-balancing NICs on physical FTP targets, why not use the free ESXi to install several FTP servers as VMs inside one or more ESXi hosts! By adding NICs to the ESXi server it is very easy to establish load-balancing. Especially since each ESX host delivers 2-16 data streams, IP-hash load-balancing works very well in this scenario, and is readily available in ESXi.

Conclusion

If you want to make high performance full-image backups of your ESX environment, you should definitely consider the use of esXpress. In my opinion, the best way to go would be to:

- Use esXpress Pro or better (more VBAs, more bandwidth, delta backups, customizing per VM);

- Reserve some extra CPU power inside your ESX hosts (only applicable if you burn a lot of CPU cycles during your backup window);

- Reserve bandwidth in physical uplinks (use a backup-specific network);

- Backup to FTP targets for optimum speed (faster than SMB/NFS/SSH);

- Place multiple FTP targets as VMs on (free) ESXi hosts;

- Use multiple uplinks from these ESXi hosts using loadbalancing mechanisms inside ESXi;

- Configure each VM to use a specific FTP target as its primary target. This may seem complex, but it guarantees that backups of a single VM always land on the same FTP target (better than selecting a different primary FTP target per ESX host);

- And finally… Use non-blocking switches in your backup LAN, which preferably support etherchannel/port aggregation.
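If assigning a primary FTP target to every single VM by hand sounds like too much work, you could generate the plan. The sketch below is only a planning aid and has nothing to do with esXpress itself (its configuration syntax is not shown here); the function name, VM names and target names are hypothetical. It hashes the VM name so that the same VM always maps to the same target, as long as the list of targets does not change.

```python
import hashlib


def primary_target(vm_name, targets):
    """Pick a stable primary FTP target for a VM by hashing its name."""
    digest = hashlib.sha256(vm_name.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]


# Hypothetical names, for illustration only.
ftp_targets = ["ftp1.backup.local", "ftp2.backup.local", "ftp3.backup.local"]
vm_names = ["web01", "db01", "mail01", "file01"]

plan = {vm: primary_target(vm, ftp_targets) for vm in vm_names}
for vm, target in plan.items():
    print(f"{vm} -> {target}")
```

Keep in mind that adding or removing a target changes the modulo, so most VMs would get a different primary target; for a small environment a hand-made table works just as well.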

If you design your backup environment like this, you are sure to get a very nice throughput! Any comments or inquiries for support on this item are most welcome.
