PHD Virtual Backup 5.1er – First Impressions

Today I got my hands on the new PHD Virtual Backup Appliance – version 5.1-ER. Following in the footsteps of its XenServer brother, this new version uses a single VBA (versus the previous versions, where multiple VBAs were used). Best of all: ESXi support at last!

Upgrading to the new version

This version is an Early Release version of the product. There does not seem to be an upgrade option from the 4.0-5-version dedup store. On the bright side, you actually CAN run both products (the older 4.0-5 and the brand new 5.1) next to each other. So in the real world one would probably hang on to the old setup (and disable scheduled backups) and start backing up using the new version. Once you have the required backup history on the new backup, you can safely discard or archive the old solution.

What has changed?

As said, the new version needs only a single VBA (Virtual Backup Appliance) instead of multiple smaller VBAs. You are, however, allowed to run multiple VBAs, as long as your license covers them. This way you can freely tune performance and scale to whatever level you need to go. Best of all: As you install more VBAs, you don’t need to buy extra operating system licenses (like with most competitors).

Hot Add – Cold Add

With the release of the VMware vStorage APIs, a lot of very exciting things happened in the backup community. Two features stand out: Changed Block Tracking (CBT) and the hot-add feature. Hot-add is handy for adding virtual disks to, and removing them from, a running VM. This enables you to quickly mount virtual disks to a backup VM, without the need to shut down and restart that VM. After the virtual disk has been hot-added to the backup VM, CBT can ensure you only back up the blocks that actually changed after the last successful backup. For all you tape lovers out there: It results in a sort of “incremental forever” scheme.
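
To make the “incremental forever” idea a bit more concrete, here is a minimal Python sketch of changed block tracking. It is a toy model and not the vStorage API or PHD Virtual’s code: the `TrackedDisk` class, the `backup` function and the tiny block size are all made up for illustration.

```python
BLOCK_SIZE = 4  # tiny block size, purely for illustration


class TrackedDisk:
    """Toy virtual disk that remembers which blocks changed since the last backup."""

    def __init__(self, n_blocks):
        self.blocks = [bytes(BLOCK_SIZE)] * n_blocks
        # Before the first backup, every block counts as changed.
        self.changed = set(range(n_blocks))

    def write(self, index, data):
        self.blocks[index] = data
        self.changed.add(index)


def backup(disk, store):
    """Copy only the changed blocks into `store`, then reset the tracking."""
    copied = sorted(disk.changed)
    for index in copied:
        store[index] = disk.blocks[index]
    disk.changed.clear()
    return copied


disk = TrackedDisk(n_blocks=8)
store = {}
first = backup(disk, store)    # first run copies all 8 blocks
disk.write(3, b"abcd")
disk.write(5, b"efgh")
second = backup(disk, store)   # second run copies only blocks 3 and 5
print(len(first), second)
```

After the first full pass, every later backup only touches the blocks that were written in between, which is why the scheme never needs another full backup.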

Every modern backup vendor pats itself on the back for having hot-add. But PHD Virtual (or should I say esXpress, as it was called then) had the problem of “how to mount virtual disks to backup VMs” fixed YEARS ago. I like to call this “cold add”: By using one VM per backup stream, they just shut down, reconfigured and restarted their backup VMs (called VBAs).

That smart way of using “cold add” has been somewhat hurting the product lately: Because they still used cold add, the work around making a backup carried a big overhead: VBAs had to be shut down, started, configured, reconfigured, checked and checked again. They do a really good job at this, but with the coming of hot-add, holding on to “cold add” was simply starting to take too much time.

The new PHD Virtual Backup 5.1 also uses hot-add. This simplifies making backups, and more important: it reduces the overhead around the making of the actual backups radically. Also, a VBA no longer has to be limited to a single backup stream: The new VBA can handle up to four backup streams in parallel. Not enough for you? Then simply add another VBA and you gain four more.
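
The scaling rule is simple arithmetic, sketched below in Python. The only number taken from the product is the four streams per VBA mentioned above; the function names are made up, and licensing limits are ignored.

```python
import math

STREAMS_PER_VBA = 4  # parallel backup streams per VBA, as stated for version 5.1


def total_streams(vba_count):
    """Parallel backup streams available with the given number of VBAs."""
    return vba_count * STREAMS_PER_VBA


def vbas_needed(parallel_streams):
    """Smallest number of VBAs that can run this many streams at once."""
    if parallel_streams <= 0:
        return 0
    return math.ceil(parallel_streams / STREAMS_PER_VBA)


print(total_streams(2), vbas_needed(5))
```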

Installing the new PHD Virtual 5.1

If you were a previous user of PHD Virtual Backup (or esXpress), you’ll know the setup of the product has not always been that easy. The product used to be the direct result of some very smart people. That’s the good part. The bad part was that it showed: In the early versions, you needed quite a lot of knowledge to set it up and configure it. This setting up got better and better. Right up to the point of version 5.1: WOW.

Setting up the backup never was THAT easy. Basically there are two things to be done:

1) Install an MSI on your PC running the VI client;

2) Deploy and start an OVF appliance to your vSphere environment.

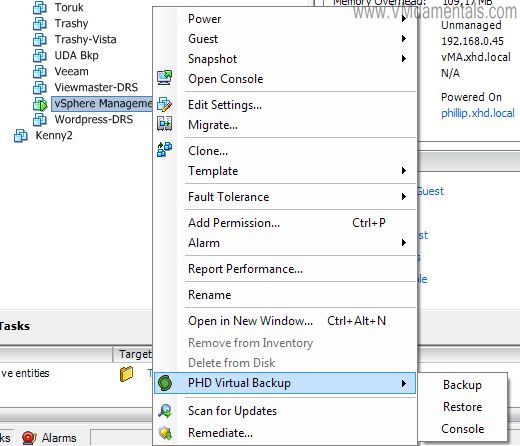

After you restart the VI client (if it was running) you gain a simple but owww so brilliant plugin for the VI client:

Before we can actually start making backups, we need to configure some settings within the VBA. Using the smart little plugin you select “Console” and then you hit the “configure” tab. The plugin opens its main window and configuration is surely easy enough:

In this case I used my IOmega IX2-200 (thanks Chad 🙂 ) as a backup target. NFS used to be THE backup target in the days of the PHD Virtual backup 4.0-5, but no more: Because the new VBA is only a single VBA with dedup incorporated into the VBA, there is nothing to stop you from selecting a virtual disk (vmdk) as your backup target – In fact, this seems to be the best performer (not tested this too much yet though). Very nice detail: The moment you add a virtual disk to the appliance, you can select “Attached virtual disk” as your backup target, and the rest is fully automagic: The disk is found, initialized and ready to go. Another Wow.

Making backups

Making a backup is very, very easy. Right-click the VM you wish to back up, and select “backup”. Next-Next-Finish and your backup commences. If you want to back up multiple VMs on a scheduled basis: version 5.1 brings a complete rewrite of how you select what to back up and when. The selector box to determine what to back up is smart and easy to use:

Easy yet complete selector box to select what to back up

You can select all the VMs you want, and even better: Leave out the virtual disks you do not want. For example, most of my VMs have a separate disk for their swapfile, which is not included in the backup (TIP: this yields better dedup results!).

After selecting the virtual disks, you can set some options to the backup job, and you can also select when the backups should run. The best part: You can have multiple backup jobs, and they will all use the one single dedup store, which increases effectiveness. I have to do more testing on the use of multiple VBAs to a single NFS store 😛

Even more important – doing Restores

So the backup side of things is cool. But what about restores? To no surprise, this is also covered well. You can perform full restores through a restore job. No scheduled restores though, meaning: no replication like version 4.0 was able to perform. Also, you restore to a different VM (seems not possible “over” an existing VM), meaning a new MAC address as well. You can either right click on a VM and select “restore”, or you can use the job tab in the plugin to build your own restore job.

Most backup vendors (if not all) have some way of restoring files from a virtual disk on a file level. This was already available in PHD Virtual Backup 4.0, but has changed in 5.1: Instead of selecting files using the web interface of the dedup appliance (and then saving as a zip file), the plugin now features a fully functional (and really fast!) iSCSI mounting option! When you select to file restore a backup, you can mount the backup to an iSCSI target, and presto! You have a network drive on your PC from which you can directly access all the files within the virtual disk backup! This is even better than in the 4.0 version.

The third way of reaching your data is the CIFS share on the VBA. Through this share you can view and read the virtual disks directly, in “rehydrated” form. This basically means you de-dedupe your virtual disks, giving you a direct view of your non-deduped virtual disks. Again, for all tape lovers 🙂
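
For those who have not met the term before, this is roughly what dedup and rehydration boil down to. The Python below is a generic sketch of block-level dedup, not how the VBA stores its data: the hash choice, the block size and the function names are all assumptions.

```python
import hashlib

BLOCK_SIZE = 4  # tiny block size, purely for illustration


def dedup_write(store, data):
    """Store each unique block once; return the recipe (list of block hashes)."""
    recipe = []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # keep only the first copy of a block
        recipe.append(digest)
    return recipe


def rehydrate(store, recipe):
    """Rebuild the full, non-deduped image from its recipe."""
    return b"".join(store[digest] for digest in recipe)


store = {}
disk_image = b"AAAABBBBAAAAAAAA"
recipe = dedup_write(store, disk_image)
print(len(recipe), len(store))
assert rehydrate(store, recipe) == disk_image
```

The recipe lists four blocks while the store holds only two unique ones; rehydrating walks the recipe and glues the blocks back together into the original image.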

If PHD Virtual were to make those rehydrated virtual disks available through iSCSI as well (or NFS), then you could mount your backups directly to ESX, and possibly even run your VMs directly from the backup. In that case, storage VMotion could replace your restore (restore while your VM is already up!). Veeam is working on just that by the way 🙂

Dedup – anything new?

Not too much new here. It appears the same strategy is still used within the 5.1 product. Don’t get me wrong, the dedup implementation already was very, very solid, so I can imagine not too much has changed here. It seems the VBA is still able to verify written blocks offline (read: after the backup has been made), and invalidate backups with failed blocks. The cool part: This dedup implementation is able to repair failed backups by rewriting the failed blocks if they’re found in new backups. So a new backup can actually fix an old one!
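
How can a new backup fix an old one? A small Python sketch of the idea, assuming a store keyed by block hash. This is my own illustration, not PHD Virtual’s implementation; `verify` and `ingest` are hypothetical names.

```python
import hashlib


def digest(block):
    return hashlib.sha256(block).hexdigest()


def verify(store):
    """Return the hashes whose stored bytes no longer match their hash."""
    return {h for h, block in store.items() if digest(block) != h}


def ingest(store, blocks, bad):
    """Write blocks from a new backup; rewrite any block known to be bad."""
    for block in blocks:
        h = digest(block)
        if h not in store or h in bad:
            store[h] = block
            bad.discard(h)


store = {}
ingest(store, [b"AAAA", b"BBBB"], set())   # the old backup
store[digest(b"BBBB")] = b"BBBX"           # simulate a block going bad on disk
bad = verify(store)                        # offline check finds the failed block
ingest(store, [b"BBBB", b"CCCC"], bad)     # new backup contains the same block
print(len(bad), len(verify(store)))
```

Because both backups refer to the block by its hash, rewriting the bad copy during the new backup repairs every older backup that points at it.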

The new iSCSI view on the files within the dedup store (see above) is a very good step into file level restores.

So what have we lost?

The new version 5.1 of PHD Virtual Backup is really very different from the older versions. It is less complex, with fewer things to configure. As nice as that is for new/smaller users, the question arises: What features have we lost? Some features are not to be found in the ER version (although they might be in the GA version!):

1) New VMs can no longer be automagically included into the backup job, although you can just select to backup all VMs in a resource pool or folder which effectively replaces this;

2) Replication? Not there (at least not in the Early Release version);

3) Non-dedup backups? No more;

4) Backup target failover? Should think more on that, but it appears not to be there (in dedup mode not really what you want anyway though);

5) No more per-VM configuration. Cool thing is I have not really had the need anymore in this version 🙂

So basically, not much is missing that has not been replaced by something else.

So what have we gained?

Having had the bad points, now for some good points:

1) Setup is the easiest yet;

2) Integration with the VI client is great;

3) Scheduling is easier and more flexible;

4) Performance is great, especially once CBT settles;

5) File level restore through the iSCSI target is GREAT.

Feature requests?

Finally, feature requests. What I would like:

1) Really just one: Be able to mount a backup via NFS to an ESX node, and be able to start the backup directly from the dedup store. Then use Storage VMotion to restore the VM while it is already running. Wouldn’t that be cool!

[…] This post was mentioned on Twitter by Eric Sloof, Erik Zandboer. Erik Zandboer said: New blog entry: Today I got my hands on the early release version of PHD Virtual Backup 5.1 (formerly esXpress). A fir…http://lnkd.in/mj8CGy […]

“1) Really just one: Be able to mount a backup via NFS to an ESX node, and be able to start the backup directly from the dedup store. Then use Storage VMotion to restore the VM while it is already running. Wouldn’t that be cool!”

Instant Recovery with vPower by Veeam 😉

The vSphere client integration looks cool though.

Indeed 🙂

Veeam Backup 5 is the next on my target list 🙂

“If PHD Virtual were to make those rehydrated virtual disks available through iSCSI as well (or NFS), then you could mount your backups directly to ESX, and possibly ***even run your VMs directly from the backup***.”

But then they would infringe Veeam patent, of course. Veeam announced they have patented this approach back in the beginning of this year.

“Veeam is working on just that by the way”

Veeam was the one who actually invented this. And they released exactly this functionality last month.

Anvi, I know. Just kicking the guys at PHD to build this as well… Competition pushes features, and features I like 🙂

Veeam is next on the target list for a “first impressions” review.

Erik,

Thanks for the extensive review and positive remarks. The team here at PHD Virtual is very excited (and proud) to get this new release out to the market. It contains significant architectural improvements that result in a product that is much easier to install and use, has improved performance and scalability, and provides a better platform for continued feature enhancements. Our roadmap addresses the items you’ve noted and more. As you noted, one of the great benefits of the PHD Virtual products has always been that you don’t need to add a physical server to the environment to manage your backups – that remains the case with 5.1.

Jim Schrand

Dir of Product Management, PHD Virtual

[…] Erik Zandboer has already posted his first impressions of 5.1 here: http://www.vmdamentals.com/?p=1025 […]

I thought it was going to be some boring old post, but it really compensated for my time. I will post a link to this page on my blog. I am sure my visitors will find that very useful

[…] PHD5 and Veeam5 use backup jobs for automating backups, just like “regular” backup software. In Veeam5, you can run any job by right-clicking it and selecting “start”. In PHD5, you can use the plugin to right-click on a VM in the VI client and select “backup” from the plugins entry in the list. See one of my previous blogposts for some screenshots on that: PHD Virtual Backup 5.1er – First Impressions […]