Throughput part 3: Data alignment

A lot of people have discovered yet another reason why their environment is not performing quite as it should: misalignment. Ever since a VMware document stated that misalignment could cost you up to 60% of performance, it has become a popular excuse. On closer inspection the impact is often nearly negligible, but sometimes it is substantial. Why is this?

Introduction

It comes up more and more in VMware environments today: “You should have aligned the partition. No wonder performance is bad.” But what exactly is misalignment, and is it really that devastating in a normal environment? The basic idea is rather simple. RAID arrays use a certain segment size (see Throughput part 2: RAID types and segment sizes): data is striped across all members of a RAID volume (a set of disks strung together to perform as one big unit) in chunks of that size. Especially when performing random I/O (and most VMware environments do), you want only a single disk to perform a seek in order to fetch a block of data. So if the segment size on disk is 64KB and you read a 64KB block, only one disk has to seek for the data. That is, IF your data is aligned. If the data does not start on a segment boundary, a single block may span two segments, because each segment holds part of the block to be read (or written, for that matter). Exactly that is called misalignment.

In most VMware environments there are two “layers” between your VM data and the segments on disk: the VMFS and the file system inside your virtual disk. Since ESX 3.x, VMware aligns the VMFS on a 64KB boundary, so the start of each 64KB VMFS block always coincides with the start of a 64KB segment on the disks lying underneath. As soon as the blocks vSphere accesses grow bigger than 64KB, you could call it sequential access, where alignment does not help anymore. For those who might wonder: VMFS block sizes (1MB … 8MB) are not related to the I/O sizes used on disk; VMFS is able to perform I/O on subsets of these blocks.

The second “layer” is more problematic: the guest file system. NTFS under Windows Server 2003 (or earlier), and under desktop releases prior to Windows Vista, will misalign by default. I have never understood why, but by default the partition starts at sector 63, an offset of 32,256 bytes, and the actual data starts right after that. Getting NTFS aligned is simple: leave a gap so the partition starts at sector 128 (a 64KB offset) or any higher power of two. This is easily done for new virtual disks, but not so easy for existing ones (especially system disks).
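The arithmetic behind those offsets is easy to check. The short Python sketch below assumes classic 512-byte sectors; the function names are made up for illustration. It converts a partition's starting sector into a byte offset and tests whether that offset is a multiple of a given boundary, for the 63-sector default, a 128-sector (64KB) start and a 2048-sector (1MB) start.

```python
SECTOR_SIZE = 512  # assumption: classic 512-byte sectors


def start_offset(start_sector: int) -> int:
    """Byte offset at which a partition starting at `start_sector` begins."""
    return start_sector * SECTOR_SIZE


def is_aligned(start_sector: int, boundary: int) -> bool:
    """True if the partition starts exactly on a multiple of `boundary` bytes."""
    return start_offset(start_sector) % boundary == 0


if __name__ == "__main__":
    # 63 = old Windows default, 128 = 64KB boundary, 2048 = 1MB boundary
    for sector in (63, 128, 2048):
        print(f"sector {sector:>4}: offset {start_offset(sector):>9,} bytes, "
              f"4KB aligned: {is_aligned(sector, 4 * 1024)}, "
              f"64KB aligned: {is_aligned(sector, 64 * 1024)}")
```

With 512-byte sectors, sector 63 lands at 32,256 bytes, which is not even a multiple of 4KB, while sectors 128 and 2048 land exactly on 64KB and 1MB.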

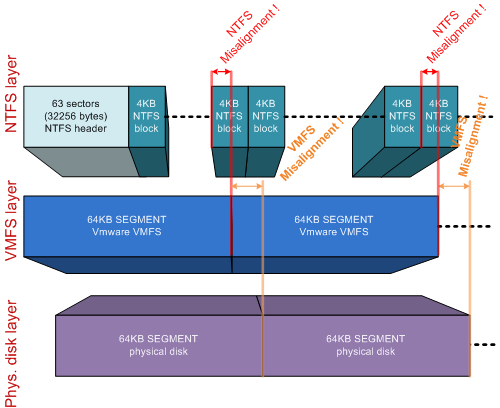

Misalignment shown graphically

A lot of people find misalignment hard to understand. A picture is worth a thousand words, so to keep this blog post somewhat shorter: pictures!

In figure 1, both VMFS and NTFS have been properly aligned, including some alignment space. In effect, for every block accessed from or to the NTFS file system, only one block on the underlying storage is touched. Thumbs up!

Figure 2 depicts misalignment of both VMFS and NTFS. This is a really undesirable situation. As you can see, accessing an NTFS block requires one VMFS block to be read, sometimes even two (due to the NTFS misalignment). And since VMFS is misaligned to the disk segments, every 64KB VMFS block in this example requires access to two segments on disk. This can and will hurt performance. Luckily, VMware spotted this problem relatively early: from ESX 3.0 onward, a VMFS formatted from the VI client is aligned automagically.

Figure 3 shows the situation I mostly see in the field. VMFS is aligned (because a VMFS formatted from the VI client automagically aligns to a 64KB boundary), but NTFS is misaligned. I see this all the time in Windows 2003 / Windows XP VMs. As you can see, most NTFS blocks touch only a single segment on the physical disk, but some “fall over the edge” of a 64KB segment. Any read or write on those NTFS blocks involves TWO segments on the underlying disks. That is the performance impact right there.

You can probably see where this is going: if the segment size of your storage is much bigger than the block size of your VM file system, the impact is limited. In the example in figure 3, two NTFS blocks out of every 64 are affected, and only for random access (for sequential access your storage cache solves the problem, since both segments on disk will be read anyway). That is an impact of 1/32nd, or 3.1%. You could probably live with that…

Now let’s up the stakes. What if your storage array used a really small segment size on physical disk, let’s say 4KB? Take a look at figure 4:

vSphere sizes the I/Os it sends to the array to match what the guest asks for. For example, if you have a database that uses 4KB blocks and performs 100% random I/O, you get a situation like figure 4. Every time you access a 4KB block, VMFS translates this into a 4KB I/O to your array. Because the NTFS / database blocks are misaligned, EACH access to a 4KB block ends up on TWO disk segments. This impacts performance dramatically (up to 50% if all I/Os are 4KB). A similar situation occurs when your database uses 8KB blocks: every I/O then touches three segments on disk instead of two, reducing the performance of the disk set by 33% (if all I/Os are 8KB).
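To put rough numbers on figures 3 and 4, here is a simple Python model; it is a sketch, not a benchmark. It assumes file system blocks are laid out back to back from the partition start, that every block is equally likely to be hit by a random I/O, and that disk cost is proportional to the number of segments an I/O touches. `segments_touched` and `average_segments` are hypothetical helper names. It compares the 63-sector default offset with a 64KB-aligned start.

```python
def segments_touched(offset: int, size: int, segment: int) -> int:
    """How many segment-sized stripe units one I/O of `size` bytes at byte `offset` overlaps."""
    first = offset // segment
    last = (offset + size - 1) // segment
    return last - first + 1


def average_segments(partition_offset: int, block: int, segment: int,
                     blocks: int = 4096) -> float:
    """Mean segments per I/O over `blocks` consecutive file system blocks,
    assuming block 0 sits exactly at the partition start and all blocks are
    equally likely to be accessed."""
    total = sum(segments_touched(partition_offset + i * block, block, segment)
                for i in range(blocks))
    return total / blocks


if __name__ == "__main__":
    MISALIGNED = 63 * 512   # 32,256 bytes: old Windows default
    ALIGNED = 128 * 512     # 65,536 bytes: 64KB boundary
    for block, segment in ((4096, 4096), (8192, 4096), (4096, 65536)):
        bad = average_segments(MISALIGNED, block, segment)
        good = average_segments(ALIGNED, block, segment)
        # If the spindles are the bottleneck, throughput scales with 1 / segments per I/O.
        loss = 1 - good / bad
        print(f"{block // 1024}KB I/O on {segment // 1024}KB segments: "
              f"{bad:.4f} vs {good:.4f} segments per I/O, ~{loss:.0%} less throughput")
```

Under these assumptions the 4KB and 8KB cases on 4KB segments come out at 2 versus 1 and 3 versus 2 segments per I/O, in line with the 50% and 33% figures above. With 4KB blocks on 64KB segments, about one block in 16 straddles a boundary; in general the straddling share is roughly block size divided by segment size, so the exact percentage depends on the file system block size you assume.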

Why ever use a small segment size?

Take an EMC SAN (Clariion): the segment size is fixed at 64KB. On a NetApp, the segment size is fixed at 4KB. It is pretty safe to say that misalignment hits harder on a NetApp than on an EMC box. That is probably why NetApp hammers so hard on alignment: in a NetApp environment it really does matter; in an EMC environment, a little less.

Looking at it the other way round: why would you ever use such a small segment size? Why not use a segment size of, say, 256KB, and enjoy only a 1/128th or 0.78% impact when not aligning? Using a large segment size does appear to be the solution to misalignment, and in a way it is. But do not forget: every time you need to access 4KB of data, 256KB is accessed on disk. So the answer is both yes AND no: a large segment size makes alignment almost a waste of time, but it introduces other problems.

Somewhere the “perfect segment size” should exist, giving the best of both worlds. The problem is that this perfect segment size varies with the type of load you feed to your SAN. EMC is sure about their 64KB (since it cannot be altered); NetApp seems sure about 4KB for the very same reason. The el-cheapo parallel-SCSI array I use for my home lab (yes, parallel SCSI indeed, and VMotion works, but that is another story) does a more generic job: for each RAID volume I am allowed to choose the segment size (called a stripe size there). Now THAT gives room for tuning! And room for getting the tuning wrong at the same time…

Dedup and misalignment

Now that deduplication is the new hype, misalignment is said to hurt dedup effectiveness. The answer, as usual, is… it depends. If you deploy two misaligned Windows 2003 servers from a template, they deduplicate very effectively since they are very much alike. If you were to align one of them (leaving the second one misaligned), dedup might not find a single block in common. Makes sense, right? Aligning shifted all data within the VMDK, in effect making every block different. If I now align the second VM as well (using the same alignment boundary), dedup is once again able to work effectively.
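The dedup effect can be illustrated with a toy model. The Python sketch below (Python 3.9+ for `randbytes`) assumes a storage system that deduplicates fixed 4KB blocks counted from the start of the virtual disk and identifies them by SHA-256. The `disk_image` layout (zero padding up to the partition start, then identical guest data) is a simplification, and all helper names are hypothetical. It counts the non-zero blocks two disks have in common.

```python
import hashlib
import random

DEDUP_BLOCK = 4096  # assumption: fixed 4KB dedup granularity


def disk_image(partition_offset: int, payload: bytes) -> bytes:
    """Hypothetical flat disk: zero padding up to the partition start, then data."""
    return bytes(partition_offset) + payload


def block_fingerprints(image: bytes) -> set:
    """SHA-256 of every fixed-size block, skipping all-zero blocks."""
    prints = set()
    for pos in range(0, len(image), DEDUP_BLOCK):
        chunk = image[pos:pos + DEDUP_BLOCK]
        if any(chunk):  # all-zero padding would dedup trivially, so ignore it
            prints.add(hashlib.sha256(chunk).digest())
    return prints


def shared_blocks(a: bytes, b: bytes) -> int:
    """Number of distinct non-zero blocks that appear on both disks."""
    return len(block_fingerprints(a) & block_fingerprints(b))


if __name__ == "__main__":
    template = random.Random(42).randbytes(1 << 20)  # stand-in for 1MB of guest data
    vm1_misaligned = disk_image(63 * 512, template)
    vm2_misaligned = disk_image(63 * 512, template)
    vm1_aligned = disk_image(128 * 512, template)
    vm2_aligned = disk_image(128 * 512, template)
    print("both misaligned:", shared_blocks(vm1_misaligned, vm2_misaligned))
    print("one aligned:    ", shared_blocks(vm1_misaligned, vm2_aligned))
    print("both aligned:   ", shared_blocks(vm1_aligned, vm2_aligned))
```

In this model two clones with the same partition offset share every data block. Moving one of them from sector 63 to sector 128 shifts its data by 512 bytes relative to the 4KB dedup grid, so the two disks have no blocks in common; aligning both restores full sharing.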

So the final answer should be: If dedup is to be effective, either align ALL VMs, or align NONE.

How to get rid of misalignment

Let’s say you’ve found that your VMs are misaligned. If things are really bad, they also sit on a RAID volume with a very small segment size. Alignment could save the day. So how do you go about it? Several solutions I’ve come across:

- Manually;

- GParted utility;

- Use Vizioncore’s vOptimizer;

- If you’re a NetApp customer and use ESX (not ESXi), use their alignment tool mbrscan/mbralign;

- V2V your VMs using Platespin PowerConvert and align them on the way.

Manual alignment is perfect for data drives. The idea is that you add a second data drive and create an aligned partition on it using diskpart:

- Open a command prompt and run diskpart;

- list disk – then select disk x (the new, empty disk);

- create partition primary align=64 (or any higher power of 2; the value is in KB).

After that, stop whatever service is using your data drive, copy all data over, change the drive letters so that the new aligned disk takes over the old data drive’s letter, restart your services, and remove the original data disk from the VM. This works great for SQL, Exchange, file servers etc. The big downside: you cannot align existing system disks using diskpart (not even from another VM, because diskpart’s create partition is destructive).

GParted is a utility that is said to align your partition when you resize it. I have never looked into it, but it’s worth checking out.

Vizioncore’s vOptimizer is a very nice tool that performs the alignment for you. Basically it shuts down the VM in question and moves every block inside your VMDK(s), so you end up with all disks aligned. The VM is then restarted and an NTFS disk check is forced. After that you’re good to go. It has served me well on several occasions! You even get two alignments for free if you decide to give their product a spin.

NetApp customers get an alignment tool for free: mbralign. I have never used it, but apparently it does about the same job as vOptimizer: it shuts down your VM, aligns the disks and restarts the VM. It only works on ESX though (it installs software in the Service Console).

If you cannot live with the downtime but need to align anyway, consider Platespin products. They can perform a “hot” V2V and align in the process. When copying the data is complete, they fail over from the original VM to the newly V2Ved VM, syncing the final changes to the destination disk(s). You end up with an aligned copy of your VM with minimal downtime.

How to prevent misalignment in the first place

Misalignment is often seen, but it is not necessary at all if you think about it before you start. A lot of people create templates; not many align them… But you could! If you have a (misaligned or not) VM lying around, add the empty disk that is to become the template’s system disk to it, and create an aligned partition on it from that “helper” VM (see the diskpart description above). Then detach the disk from the helper VM again, and install Windows on the (now aligned) disk. Leave the existing partitioning untouched during setup and you are good to go. Bootable XP CDs can also do the trick here.

Now your template is aligned. The upshot: Any VM deployed from this template is too!

There is an easy way to check under Windows whether your disks are aligned. Simply run msinfo32.exe and expand Components > Storage > Disks. Find the item “Partition Starting Offset”. If it reads 32,256, you’re out of luck: your partition is misaligned. If it reads 65,536, you have a 64KB aligned partition. If it reads 1,048,576, the partition is aligned on a 1MB boundary (the Windows 2008 / Windows 7 default).
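If you would rather check a virtual disk from outside the guest, the same starting offset can be read straight from the MBR partition table. The Python sketch below assumes a disk with a classic MBR (not GPT), 512-byte sectors, and a raw image such as a preallocated flat VMDK of a powered-off VM; the script and its helper names are hypothetical. In an MBR, the four 16-byte partition entries start at byte 446, each holding the partition type at byte 4 and the starting LBA as a little-endian 32-bit value at byte 8.

```python
import struct
import sys

SECTOR_SIZE = 512        # assumption: classic 512-byte sectors
PARTITION_TABLE = 446    # MBR partition table starts at byte 446
ENTRY_SIZE = 16          # four 16-byte entries


def partition_start_offsets(mbr: bytes) -> list:
    """Return the starting byte offset of every used primary partition."""
    if len(mbr) < 512 or mbr[510:512] != b"\x55\xaa":
        raise ValueError("no MBR boot signature found")
    offsets = []
    for slot in range(4):
        base = PARTITION_TABLE + slot * ENTRY_SIZE
        partition_type = mbr[base + 4]
        (start_lba,) = struct.unpack_from("<I", mbr, base + 8)
        if partition_type != 0:  # type 0 marks an empty slot
            offsets.append(start_lba * SECTOR_SIZE)
    return offsets


def describe(offset: int) -> str:
    """Classify an offset like the msinfo32 check; anything off a 64KB boundary counts as misaligned."""
    if offset % (1024 * 1024) == 0:
        return "aligned on 1MB"
    if offset % (64 * 1024) == 0:
        return "aligned on 64KB"
    return "misaligned"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python mbr_offsets.py myvm-flat.vmdk
    with open(sys.argv[1], "rb") as disk:
        for offset in partition_start_offsets(disk.read(512)):
            print(f"partition starting offset {offset:,} bytes: {describe(offset)}")
```

A partition created with the old Windows default reports 32,256 bytes here, just like msinfo32 does from inside the guest.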

Conclusion

Is alignment important? Well, it depends, particularly on the segment size used within your storage array: the smaller the segment size, the bigger the impact. Bottom line though: alignment always helps! Get off to a good start, align right from the beginning, and you’ll profit ever after. If you didn’t get off to a perfect start, consider aligning your VMs afterwards. Start with the heavy random-I/O data disks for sure, but I would recommend aligning the system disks as well, using one of the tools described above.


What do you think the alignment design or preference should be on a NetApp V-Series?

Hi,

I do not have a lot of experience with NetApp. From what I’ve seen, their file system under the hood looks like ZFS. They use 4K blocks as their segment size, which is pretty small. Since a lot of VMs also use 4K blocks extensively, I’d expect alignment to be of greater importance on a NetApp. You could get away with 4K alignment, but since there is no harm in aligning to higher values, I’d always design for that. Windows 2008 and Windows 7 align automagically at 1MB (which is also aligned for any power-of-two segment size up to 1MB). Personally, if I had to align manually, I’d align on 64KB. That certainly covers the alignment requirements for NetApp, and also for EMC and most others; I have not seen many vendors using a segment size over 64KB.
